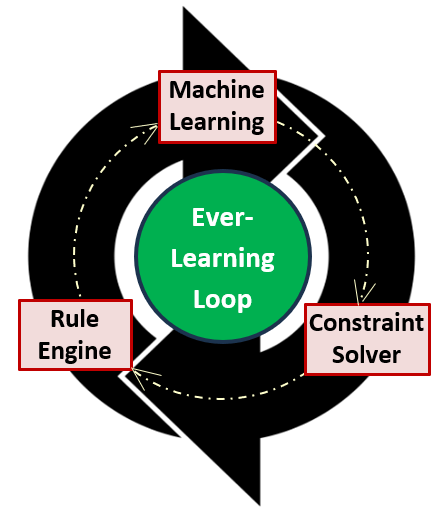

After listening to Prof. Bob Kowalski’s latest talk, “What is AI?”, I remembered his talk about logical AI at the joint session of DecisionCAMP and RuleML+RR in London in 2017. It also reminded me of my own prediction at that time about “What is the next ‘killer’ application for Decision Management?” Here is what I wrote about a Decision Reasoner in 2017:

Category AI

Machine Learning inside Decision-Making Applications: Practical Use Cases

Machine Learning (ML) tools have been successfully used in decision-making applications for many years. Despite many success stories, ML long remained far less popular in enterprise-level software than the commonly used Rule Engines or even Optimization tools. Why? Until recently, some application developers considered ML to be “too scientific” or unstable, with rarely guaranteed results; others complained that it required too much data for practical applications. Nowadays, when Generative AI dominates most technology news and many populists use the terms “AI” and “ML” almost as synonyms, the situation is changing. Vendors and practitioners who professionally develop decision intelligence software see a growing interest in ML tools, as enterprise developers want to add AI to their existing decision-making applications.

Sanity Checkers for AI-based Decisions

“When it comes to AI, expecting perfection is not only unrealistic, it’s dangerous. Responsible practitioners of machine learning and AI always make sure that there’s a plan in place in case the system produces the wrong output. It’s a must-have AI safety net that checks the output, filters it, and determines what to do with it.”

Cassie Kozyrkov, Chief Decision Scientist, Google

“When we attempt to automate complex tasks and build complex systems, we should expect imperfect performance. This is true for traditional complex systems and it’s even more painfully true for AI systems,” – wrote Cassie Kozyrkov. “A good reminder for all spheres in life is to expect mistakes whenever a task is difficult, complicated, or taking place at scale. Humans make mistakes and so do machines.”

Like many practitioners who have applied different decision intelligence technologies to real-world applications, I can confirm the importance of this statement. I can also share how we dealt with the validation of automatically made decisions in different complex decision-making applications.
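To make the idea of a safety net that “checks the output, filters it, and determines what to do with it” more concrete, here is a minimal sketch. It is only an illustration under stated assumptions, not the approach used in any particular application: the `Check` class, the `sanity_check` function, the three outcomes (`accept`, `review`, `reject`), and the loan-style fields are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Check:
    """One sanity rule applied to an automatically made decision (hypothetical)."""
    name: str
    passes: Callable[[dict], bool]
    on_fail: str  # "reject" or "review" (assumed outcome labels)


def sanity_check(decision: dict, checks: List[Check]) -> Tuple[str, List[str]]:
    """Return what to do with the decision and the names of the failed checks."""
    failed = [c for c in checks if not c.passes(decision)]
    if any(c.on_fail == "reject" for c in failed):
        action = "reject"   # at least one hard constraint is violated
    elif failed:
        action = "review"   # only soft checks failed: route to a human
    else:
        action = "accept"
    return action, [c.name for c in failed]


# Hypothetical checks for a decision produced by an ML model.
checks = [
    Check("approved amount is not negative",
          lambda d: d["approved_amount"] >= 0, "reject"),
    Check("approved amount does not exceed requested amount",
          lambda d: d["approved_amount"] <= d["requested_amount"], "reject"),
    Check("model confidence is at least 0.8",
          lambda d: d["confidence"] >= 0.8, "review"),
]

decision = {"approved_amount": 5000, "requested_amount": 8000, "confidence": 0.6}
action, failed = sanity_check(decision, checks)
print(action, failed)
```

Keeping the checks as data rather than hard-coded conditions means a business analyst could, in principle, maintain them separately from the model that produces the decisions.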

Generative AI at DecisionCAMP

As the Chair of DecisionCAMP-2023, I published my notes from this major annual decision-management event. This year was dominated by the “huge elephant in our decision modeling kitchen”: Generative AI. Unlike many other conferences that discuss this explosive technology in general terms, the Decision Management Community deals with very specific real-world problems and has a well-established, standardized infrastructure for solving them in practice. So we have good ideas about where exactly to apply the constantly advancing ChatGPT, LLMs, and other Generative AI tools.

Helping ChatGPT to Build a Working Decision Model

These days only lazy people don’t write about ChatGPT and large language models (LLMs). Vendors are trying to be the first to announce a ChatGPT integration even when they don’t have anything serious to show. I’ve also written about it: see “ChatGPT Producing Simple Decision Models” and “LLM and Decision Modeling”. This weekend I decided to help ChatGPT (which is now at GPT-4) to address the Challenge “Permit Eligibility” published by DMCommunity.org. It has a simple rule: “An applicant is eligible for a resident permit if the applicant has lived at an address while married and in that time period, they have shared the same address at least 7 of the last 10 years.” But this rule contains several tricky assumptions, so it is no wonder that DM vendors are not in a hurry to submit a solution.
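To show why the rule is trickier than it looks, here is a minimal sketch of one possible reading of it. This is not the Challenge's reference solution or the model built with ChatGPT. It assumes that “7 of the last 10 years” means the total number of days of shared address, accumulated while married and within the last 10 years, is at least the number of days in the last 7 years; it also assumes that the shared-address periods do not overlap each other. The function names and the data layout are hypothetical.

```python
from datetime import date
from typing import List, Optional, Tuple


def years_ago(today: date, years: int) -> date:
    """Same calendar day `years` earlier (Feb 29 falls back to Feb 28)."""
    try:
        return today.replace(year=today.year - years)
    except ValueError:
        return today.replace(year=today.year - years, day=28)


def overlap_days(a_start: date, a_end: date, b_start: date, b_end: date) -> int:
    """Days in the intersection of two half-open date ranges [start, end)."""
    start, end = max(a_start, b_start), min(a_end, b_end)
    return max((end - start).days, 0)


def is_eligible(today: date,
                marriage_start: date,
                marriage_end: Optional[date],
                shared_periods: List[Tuple[date, date]],
                required_years: int = 7,
                window_years: int = 10) -> bool:
    """Assumed reading: accumulated shared-address days while married,
    inside the last `window_years`, must cover `required_years`."""
    window_start = years_ago(today, window_years)
    lo = max(window_start, marriage_start)
    hi = min(today, marriage_end) if marriage_end else today
    if lo >= hi:
        return False  # not married at any time inside the window
    shared = sum(overlap_days(s, e, lo, hi) for s, e in shared_periods)
    required = (today - years_ago(today, required_years)).days
    return shared >= required


today = date(2023, 6, 1)
periods = [(date(2013, 6, 1), date(2017, 6, 1)),
           (date(2019, 6, 1), date(2023, 6, 1))]
print(is_eligible(today, date(2010, 1, 1), None, periods))
```

Other readings are equally defensible, for example requiring 7 consecutive years or counting calendar years instead of days, which is exactly the kind of assumption a decision model has to make explicit.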

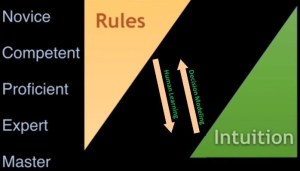

We Know More Than We Can Tell

Living through the ChatGPT boom, it is interesting to read this article “Is ChatGPT Aware?”:

“Polanyi’s paradox, named in honor of the philosopher and polymath Michael Polanyi, states that ‘we know more than we can tell.’ He means that most of our knowledge is tacit and cannot be easily formalized with words. In The Tacit Dimension, Polanyi gives the example of recognizing a face without being able to tell what facial features humans use to make such a distinction.”

It brings back some of my related thoughts on “Business Rules and Tacit Knowledge” from 7 years ago. It described how “Human Learning” and “Decision Modeling” were moving in opposite directions. Will we see a change?

LLM and Decision Modeling

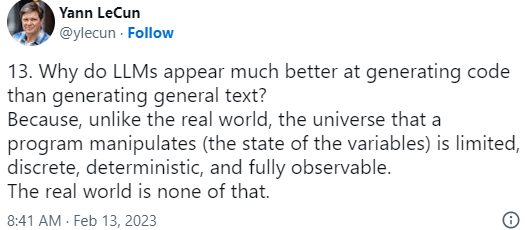

ChatGPT has the public excited, but the experts are reserved in their praise. While I was thinking about a practical application of Large Language Models (LLMs) to decision modeling, this quote from LeCun caught my attention:

When we create practical decision models, we usually deal with an even more limited “universe”. A decision model “manipulates the state of the decision variables” within a very specific business domain (insurance, loan origination, claims, medical guidelines, etc.) complemented by generic concepts well covered by such relatively small standards as DMN and SBVR. The decision modeling universe really is “limited, discrete, deterministic, and fully observable”.

So, while being cautious about the current ChatGPT hype, we may be more optimistic about the next breakthrough in Decision Modeling. I suspect the experts’ answers to my DecisionCAMP-2022 question “Are our Rule Engines Smart Enough?” would be different today.

ChatGPT Producing Simple Decision Models

I asked ChatGPT to generate a decision model for a simple Vacation Days problem. Here are two results:
