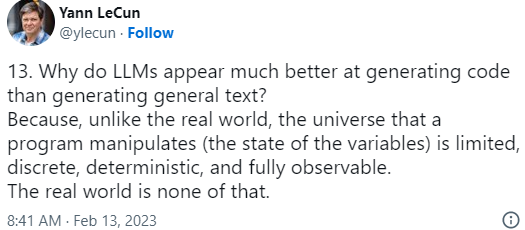

ChatGPT has the public excited, but the experts are reserved in their praise. As I was thinking about practical applications of Large Language Models (LLMs) to decision modeling, this quote from LeCun caught my attention:

When we create practical decision models, we usually deal with an even more limited “universe”. A decision model “manipulates the state of the decision variables” within a very specific business domain (insurance, loan origination, claims, medical guidelines, etc.), complemented by generic concepts that are well covered by relatively small standards such as DMN and SBVR. The decision modeling universe really is “limited, discrete, deterministic, and fully observable”.
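To make this concrete, here is a minimal sketch of how small such a universe can be. It is only an illustration: the `Applicant` fields, the thresholds, and the three rules are hypothetical, and the code only loosely mimics a first-hit decision table rather than implementing DMN or any real rule engine.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Applicant:
    # The entire observable state of this toy loan origination "universe"
    credit_score: int
    debt_to_income: float  # ratio between 0 and 1


@dataclass(frozen=True)
class Rule:
    condition: Callable[[Applicant], bool]
    decision: str


# Hypothetical rules, evaluated top to bottom; the first match wins
RULES = [
    Rule(lambda a: a.credit_score < 600, "Decline"),
    Rule(lambda a: a.debt_to_income > 0.45, "Refer"),
    Rule(lambda a: True, "Approve"),  # default rule, always matches
]


def decide(applicant: Applicant) -> str:
    """Return the decision of the first rule whose condition holds."""
    for rule in RULES:
        if rule.condition(applicant):
            return rule.decision
    raise ValueError("no rule matched")


print(decide(Applicant(credit_score=700, debt_to_income=0.30)))
```

Every input is known, every outcome comes from a short fixed list, and the same applicant always gets the same decision.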

So, while remaining cautious about the current ChatGPT hype, we may be more optimistic about the next breakthrough in Decision Modeling. I suspect the experts’ answers to my DecisionCAMP-2022 question “Are our Rule Engines Smart Enough?” would be different today.