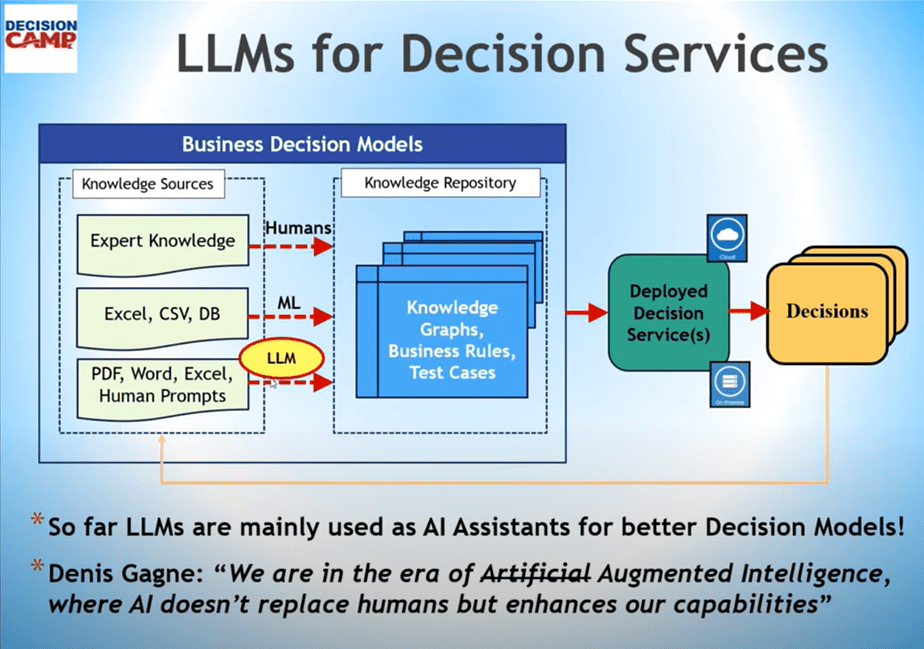

In this LinkedIn post, I compared the 2023 and 2026 results of using LLMs to generate decision models from plain-English descriptions. Here is the conclusion:

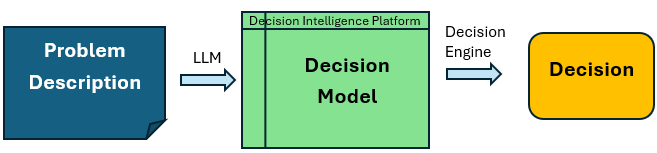

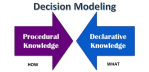

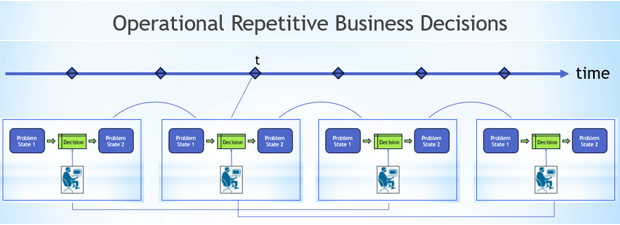

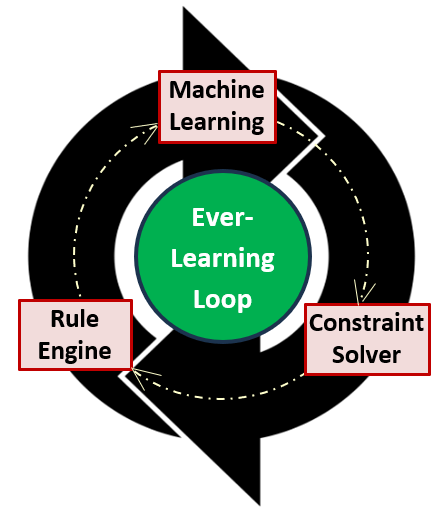

Three years ago, I was cautious in judging the newly introduced generative AI capabilities. Today, even these two examples show that LLMs are already quite good at translating relatively simple business logic, although they continue to struggle with complex, intersecting conditions. Still, the progress is undeniable. Modern LLMs can take a plain-English description of a well-specified business function and generate a reasonably good initial decision model, complete with a glossary, business rules, and test cases. Such a model can then be refined through business-friendly graphical interfaces and executed by a decision engine to find optimal, or at least good, decisions. We will undoubtedly see such capabilities, with significantly improved quality, integrated into Decision Intelligence platforms sooner than one might expect.
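To make the idea of a generated "decision model" a bit more concrete, here is a minimal Python sketch of the three artifacts mentioned above: a glossary of business terms, business rules (written as a single-hit decision table where the first matching rule wins), and test cases, plus a toy evaluator standing in for a decision engine. The loan-approval domain, the term names, and the thresholds are all hypothetical; this is not the format used by any particular Decision Intelligence platform, nor a reproduction of the models shown in the images.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical glossary: the business terms the model reasons about.
GLOSSARY = {
    "customer_age": "int: age of the applicant in years",
    "annual_income": "float: applicant's yearly income",
    "decision": "str: 'Approve' or 'Decline' (output)",
}

@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]
    decision: str

# Hypothetical single-hit decision table: rules are checked in order,
# and the first one whose condition holds determines the decision.
RULES = [
    Rule("Too young", lambda c: c["customer_age"] < 18, "Decline"),
    Rule("Low income", lambda c: c["annual_income"] < 30_000, "Decline"),
    Rule("Default", lambda c: True, "Approve"),
]

def decide(case: dict) -> str:
    """Check that all input terms from the glossary are present, then apply the first matching rule."""
    missing = [t for t in GLOSSARY if t != "decision" and t not in case]
    if missing:
        raise ValueError(f"missing glossary terms: {missing}")
    for rule in RULES:
        if rule.condition(case):
            return rule.decision
    raise LookupError("no rule matched")

# Hypothetical test cases: (inputs, expected decision).
TEST_CASES = [
    ({"customer_age": 17, "annual_income": 50_000}, "Decline"),
    ({"customer_age": 30, "annual_income": 20_000}, "Decline"),
    ({"customer_age": 30, "annual_income": 50_000}, "Approve"),
]

def run_tests() -> list[str]:
    """Run every test case and return a description of each failure (empty list means all passed)."""
    failures = []
    for i, (inputs, expected) in enumerate(TEST_CASES):
        actual = decide(inputs)
        if actual != expected:
            failures.append(f"case {i}: expected {expected}, got {actual}")
    return failures

if __name__ == "__main__":
    print(run_tests() or "all test cases passed")
```

The ordered, first-hit table keeps each rule independent and easy to review, which is the kind of relatively simple logic LLMs tend to handle well; rules whose conditions overlap or interact across many terms are where a generated model would most need human refinement.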